Next: Remarks on the theoretical

Up: Inviscid Burgers

Previous: Inviscid Burgers

Contents

For the special case ![$ K(t,x)=K(x)=K\mathbbm{1}_{[a,b]}(x)$](img382.png), where $K$ is a constant and ![$ [a,b]$](img383.png) is a sub-interval of ![$ [0,1]$](img335.png), we have

(3.45)

where

(3.46)

is the total time during which the characteristic curve with foot  of equation (3.39-F) with  lies in the support of $K$.
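To make the definition (3.46) concrete, here is a minimal Python sketch that approximates this residence time numerically. It assumes, for illustration only, that the characteristic curve with foot `x0` solves dX/dt = v(t, X), X(0) = x0, where `v` is a hypothetical smooth velocity field supplied by the user (for a Burgers-type equation ∂t u + u ∂x u = f, the characteristic speed is the solution u itself). The function name `residence_time`, the fixed-step RK4 integrator and the default step count are illustrative choices, not part of the original method; boundary conditions and shocks (crossing characteristics) are ignored.

```python
from typing import Callable


def residence_time(x0: float, v: Callable[[float, float], float],
                   a: float, b: float, T: float, n_steps: int = 10_000) -> float:
    """Approximate the total time in [0, T] that the curve dX/dt = v(t, X),
    X(0) = x0, spends inside the interval [a, b] (the support of K).

    Hypothetical helper: v is an assumed, smooth velocity field."""
    dt = T / n_steps
    t, x = 0.0, x0
    tau = 0.0
    for _ in range(n_steps):
        # One classical RK4 step for the characteristic ODE dX/dt = v(t, X).
        k1 = v(t, x)
        k2 = v(t + dt / 2, x + dt * k1 / 2)
        k3 = v(t + dt / 2, x + dt * k2 / 2)
        k4 = v(t + dt, x + dt * k3)
        x_next = x + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        # Count the whole step if the average of its endpoint positions is in [a, b].
        if a <= 0.5 * (x + x_next) <= b:
            tau += dt
        t, x = t + dt, x_next
    return tau


# Constant speed 0.5 from foot x0 = 0: X(t) = t/2 lies in [0.25, 0.5] for t in [0.5, 1].
print(residence_time(0.0, lambda t, x: 0.5, a=0.25, b=0.5, T=2.0))
```

In the usage line, the exact residence time is 0.5, and the sketch approximates it up to one step size: deciding each step by a single test position makes the estimate first-order accurate in `dt`, which is usually enough to see whether the function stays away from zero.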

The system is then observable if and only if the function  has a non-zero lower bound, i.e.

has a non-zero lower bound, i.e.

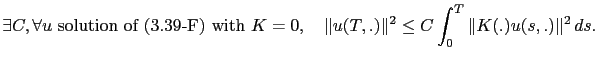

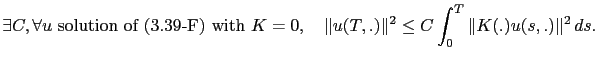

, the observability being defined by (see e.g. [93]):

, the observability being defined by (see e.g. [93]):

|

(3.47) |

In this case, Proposition 3.2 proves the global exponential decrease of the error, provided  is larger than  , where  is defined by equation (3.41).

From this remark, we can easily deduce that if the observability condition is satisfied at each iteration, in both the forward and backward integrations, then the algorithm converges and the error decreases exponentially to 0. Note that this condition is not necessary: even if  , the last exponential in equation (3.45) remains bounded.
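The observability condition at each iteration can be checked numerically by evaluating the residence time on a grid of feet and testing whether its minimum stays away from zero. The sketch below does this in a deliberately simple, hypothetical setting that is not taken from the text: a constant characteristic speed `c` on a periodic domain of length 1, so that X(t) = (x + ct) mod 1. As a further assumption, the backward integration is modelled by the opposite speed `-c`. The names `min_residence_time` and `is_observable`, the grid sizes and the tolerance are illustrative.

```python
def min_residence_time(c: float, a: float, b: float, T: float,
                       n_feet: int = 100, n_steps: int = 1000) -> float:
    """Minimum, over a grid of feet x in [0, 1), of the time in [0, T] that
    the periodic characteristic X(t) = (x + c t) mod 1 spends in [a, b].

    Hypothetical setting: constant speed c, periodic unit domain."""
    dt = T / n_steps
    lowest = float("inf")
    for i in range(n_feet):
        x = i / n_feet
        # Number of time steps whose midpoint position falls in [a, b].
        hits = sum(1 for k in range(n_steps)
                   if a <= (x + c * (k + 0.5) * dt) % 1.0 <= b)
        lowest = min(lowest, hits * dt)
    return lowest


def is_observable(c: float, a: float, b: float, T: float, tol: float = 1e-12) -> bool:
    """Hypothetical check: the residence time has a positive lower bound."""
    return min_residence_time(c, a, b, T) > tol


for T in (1.0, 2.0):
    forward = is_observable(0.5, 0.25, 0.5, T)    # forward sweep, speed c
    backward = is_observable(-0.5, 0.25, 0.5, T)  # backward sweep, assumed speed -c
    print(f"T = {T}: forward {forward}, backward {backward}")
```

With T = 1, each characteristic covers only half of the domain, so in the forward sweep feet such as x = 0.6 never reach [0.25, 0.5] and the check fails (the backward sweep fails in the same way). With T = 2, every characteristic makes a full loop and spends about (b - a)/|c| = 0.5 time units in the support, so both checks pass.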
